Now that we have an up-to-date inventory with our VMs and LXCs properly tagged and sorted into groups, we can create our first patching job. This one will be for a single Debian host.

Create a .yml file for Debian patching in Gitea

- In your existing ansible playbooks Gitea repo, create a new file.

- What does this one do?

  - Performs minor updates only (not major OS updates like from Bookworm to Trixie!)

  - Cleans up unused packages

  - Reboots if there is a kernel update OR if uptime is > 90 days

- Application of this template: It is suitable for single hosts that are not running in a cluster and have no dependency on one another.

    # patch_debian_single_hosts.yml
    ---
    - name: Patch Debian-based Systems
      hosts: debian_hosts
      become: true # Elevate permissions (execute with sudo)

      tasks:
        - name: Update apt repo and cache
          ansible.builtin.apt:
            update_cache: yes
            force_apt_get: yes
            cache_valid_time: 3600

        - name: Upgrade all apt packages
          ansible.builtin.apt:
            upgrade: dist
            autoremove: yes
            autoclean: yes

        - name: Check if a reboot is required (kernel/libs)
          ansible.builtin.stat:
            path: /var/run/reboot-required
          register: reboot_required_file

        - name: Check if uptime is less than 90 days
          ansible.builtin.assert:
            that: (ansible_uptime_seconds | int) < 7776000 # (90*24*60*60)
            fail_msg: "Uptime ({{ (ansible_uptime_seconds / 86400) | round(1) }} days) is over 90 days. Forcing reboot."
            quiet: true
          # This task will "fail" if uptime is > 90 days
          # We use 'ignore_errors: true' so the playbook continues
          register: uptime_check
          ignore_errors: true

        - name: Reboot the server if (kernel needs it) OR (uptime > 90 days)
          ansible.builtin.reboot:
            msg: "Rebooting server after Ansible patch run"
            connect_timeout: 5
            reboot_timeout: 300
            post_reboot_delay: 30
            test_command: whoami
          when: reboot_required_file.stat.exists or uptime_check.failed
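The assert task above is reported as failed (and ignored) whenever uptime exceeds the limit, which can look alarming in the AWX job output. As a rough sketch of one alternative, not part of the original playbook, the same decision can be computed into a fact first. The variable name `reboot_needed` is introduced here purely for illustration. The two tasks would replace the assert and reboot tasks and assume `reboot_required_file` has been registered as shown above.

```yaml
# Sketch only: replaces the "assert" and "reboot" tasks of the playbook above.
- name: Decide whether a reboot is needed
  ansible.builtin.set_fact:
    # 7776000 s = 90 * 24 * 60 * 60; same threshold as the assert task
    reboot_needed: "{{ reboot_required_file.stat.exists or (ansible_uptime_seconds | int) >= 7776000 }}"

- name: Reboot the server if (kernel needs it) OR (uptime >= 90 days)
  ansible.builtin.reboot:
    msg: "Rebooting server after Ansible patch run"
    connect_timeout: 5
    reboot_timeout: 300
    post_reboot_delay: 30
    test_command: whoami
  when: reboot_needed | bool
```

The trade-off is that you lose the explicit "uptime is over 90 days" message in the log, so keep the assert version if that message is useful to you.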

- Once the file is uploaded to Gitea (or your preferred version control system), sync the project: go to Resources → Projects and sync your Ansible Playbooks project. This ensures that the new file is accessible to AWX (it pulls a local copy).

Add your SSH credentials

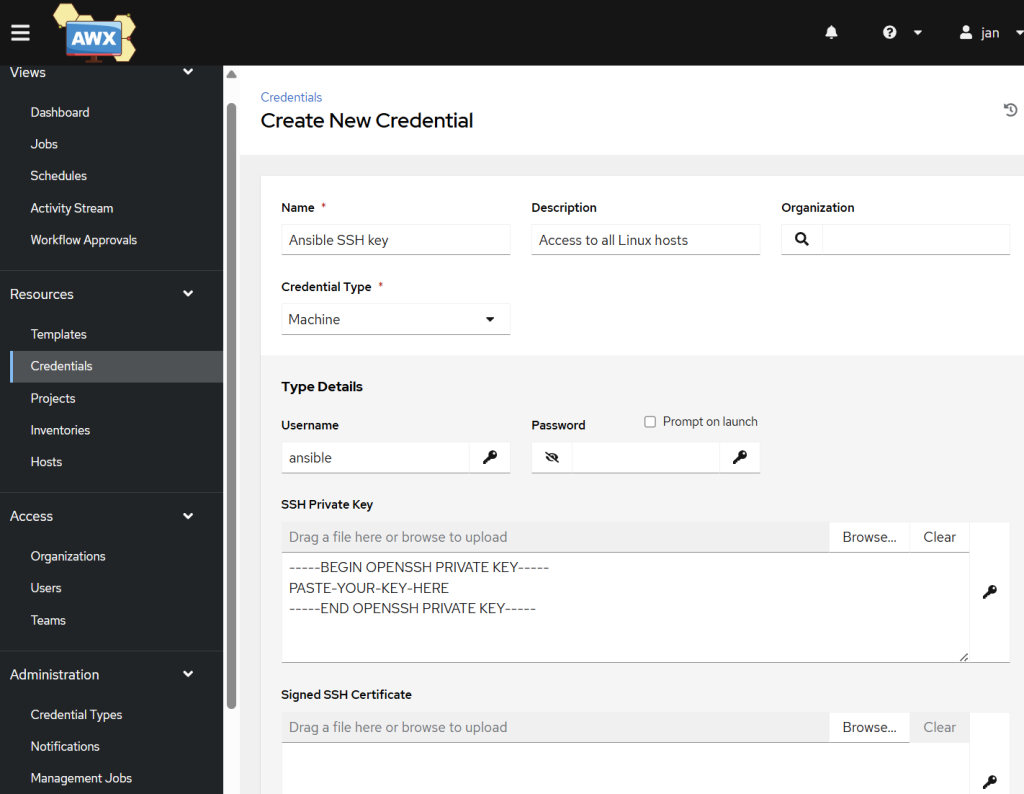

First, we need to take the SSH key that we created much earlier (the one that can reach every host without a password) and add it to the AWX credential store.

- In AWX, go to Resources → Credentials and add a new one.

- Name: Ansible SSH key (or whatever you like)

- Credential Type: Machine

- Username: ansible

- SSH Private key: paste in the private part of your SSH key

- Leave all else blank
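If you prefer to keep this step in code rather than clicking through the UI, the same credential can probably be created with the `awx.awx` collection. The following is a minimal sketch under several assumptions: the collection is installed where you run it, the connection details for your AWX instance are supplied through the collection's usual configuration, the organization is called `Default`, and the key lives at the hypothetical path shown in `ssh_private_key_path`.

```yaml
# create_awx_credential.yml (hypothetical file name)
---
- name: Create the machine credential in AWX
  hosts: localhost
  connection: local
  gather_facts: false
  vars:
    # Assumption: location of the private key created earlier in this series
    ssh_private_key_path: /home/ansible/.ssh/id_ed25519
  tasks:
    - name: Add the SSH key as a Machine credential
      awx.awx.credential:
        name: Ansible SSH key
        organization: Default            # assumption: your organization name
        credential_type: Machine
        inputs:
          username: ansible
          # rstrip=false keeps the trailing newline of the key file
          ssh_key_data: "{{ lookup('ansible.builtin.file', ssh_private_key_path, rstrip=false) }}"
        state: present
```

Avoid committing the private key itself to Gitea; the sketch reads it from disk at run time for that reason.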

Create the Job Template

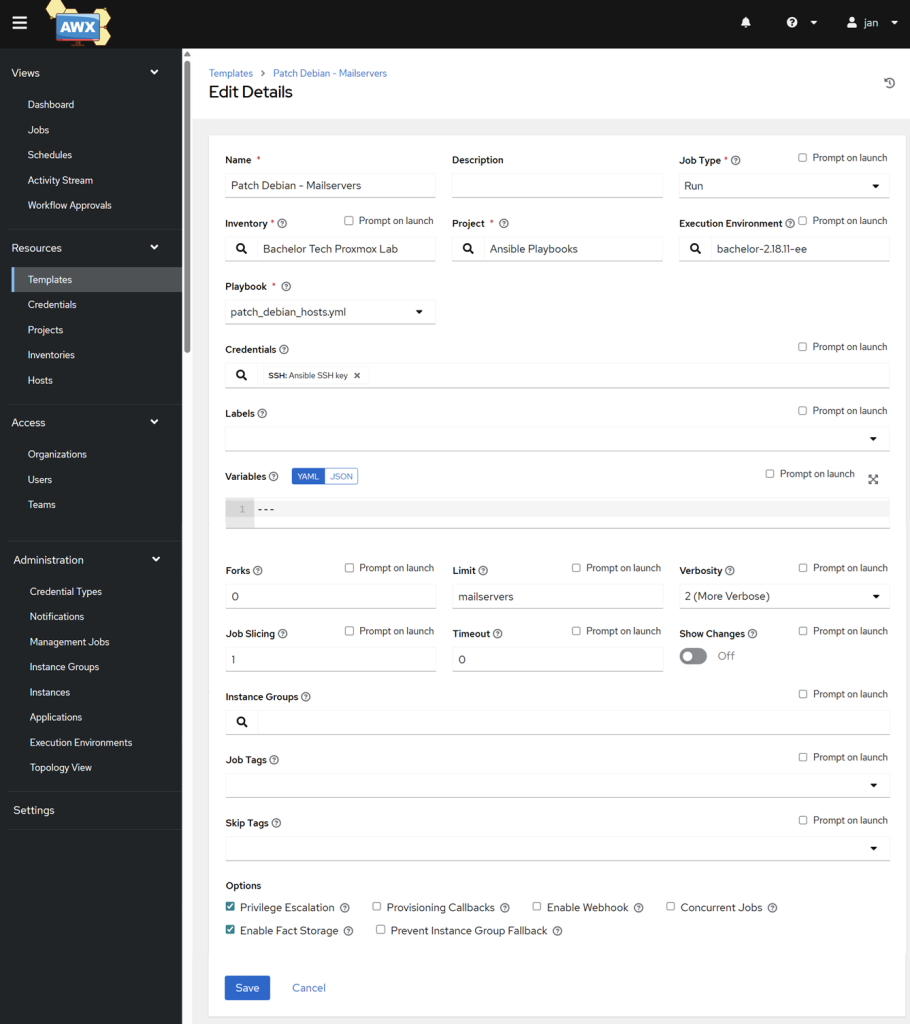

The job template is the “Launch” button for your patch job. Go to Resources → Templates and click on Add → Add job template. Fill it in as follows:

- Name: Patch Debian Servers

- Inventory: Your Proxmox inventory

- Project: Ansible Playbooks (your Gitea project)

- Execution Environment: (your custom EE)

- Playbook: In the dropdown, you should find patch_debian_single_hosts.yml. Select it.

- Credentials: Click on the 🔍 and select your Ansible SSH key (the one you made with your SSH private key).

- Limit: In the lower middle section called Limit, add a group that refers to a standalone or test server that you would like to test the template on. In my case, it was a group called mailservers. Otherwise the template would be applied to ALL servers marked as debian_hosts, which is not desirable in production.

- Privilege Escalation: Check this box. It is required for become: true (run as root) to work.

- Save it.
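For reference, the form above maps fairly directly onto the `awx.awx.job_template` module. This is only a sketch of what the equivalent task might look like, assuming the `awx.awx` collection is available and already configured to reach your AWX instance. The inventory, execution environment, and organization names are placeholders, and the playbook file name is taken from the header comment of the playbook.

```yaml
# Sketch only: a task for a play that runs against localhost.
- name: Create the Debian patching job template
  awx.awx.job_template:
    name: Patch Debian Servers
    job_type: run
    organization: Default                 # assumption: your organization name
    inventory: Proxmox inventory          # placeholder: your inventory's name
    project: Ansible Playbooks
    execution_environment: Custom EE      # placeholder: your custom EE's name
    playbook: patch_debian_single_hosts.yml
    credentials:
      - Ansible SSH key
    limit: mailservers                    # test group; drop only when ready for all debian_hosts
    become_enabled: true                  # the "Privilege Escalation" checkbox
    state: present
```

Keeping `limit` in the template itself means an accidental launch still only touches the test group.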

- At this point our templating does not include snapshots, so before you run this job, take a snapshot of your VM or LXC in Proxmox.

- Back on the Templates page, click on the Launch icon (the rocket 🚀) next to your new Patch Debian Servers template to start the job.

- Observe the magic happen and confirm whether the host got rebooted (which would happen on a kernel change or uptime > 90 days).

- In case the job fails, check the logs. This could be because:

  - There is no response on the given SSH port. Is your host’s SSH daemon listening on that port? Check it on that host by running nano /etc/ssh/sshd_config.

  - The host is not reachable from the Kubernetes task pod. Remember we are referring to the FQDN hostname (such as server1.mydomain.tld), not the direct IP address. Have you made it possible for the cluster to reach your LAN? See the earlier steps. You can also manually define the particular hostname in CoreDNS.
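Both failure causes can be checked from the AWX side with a small diagnostic playbook. The sketch below is an illustration rather than part of the original setup: the file name is hypothetical, and it assumes your inventory either sets `ansible_host`/`ansible_port` or relies on the FQDN and port 22. The probe runs from the execution environment (via `delegate_to: localhost`), so it fails if the pod cannot resolve the name or cannot reach the SSH port.

```yaml
# check_ssh_reachability.yml (hypothetical file name)
---
- name: Check SSH reachability from the AWX execution environment
  hosts: debian_hosts
  gather_facts: false            # no SSH login is needed for this check
  tasks:
    - name: Wait for the SSH port to answer
      ansible.builtin.wait_for:
        host: "{{ ansible_host | default(inventory_hostname) }}"
        port: "{{ ansible_port | default(22) }}"
        timeout: 10
      delegate_to: localhost     # probe from the task pod, not on the target
```

Run it as its own job template with the same Limit as the patch job. A timeout here points to DNS or network routing from the cluster, or to the SSH daemon listening on a different port, rather than to the SSH key.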

Good job! What other templates can we run? And how about creating a snapshot template just in case the update does not go as planned?